PromSec: Prompt Optimization for Secure Generation of Functional Source Code with Large Language Models (LLMs)

Problem: LLMs tend to generate functional but insecure code.

Objective: Optimize prompts so that LLMs generate code that is both secure and functional.

Key Contributions:

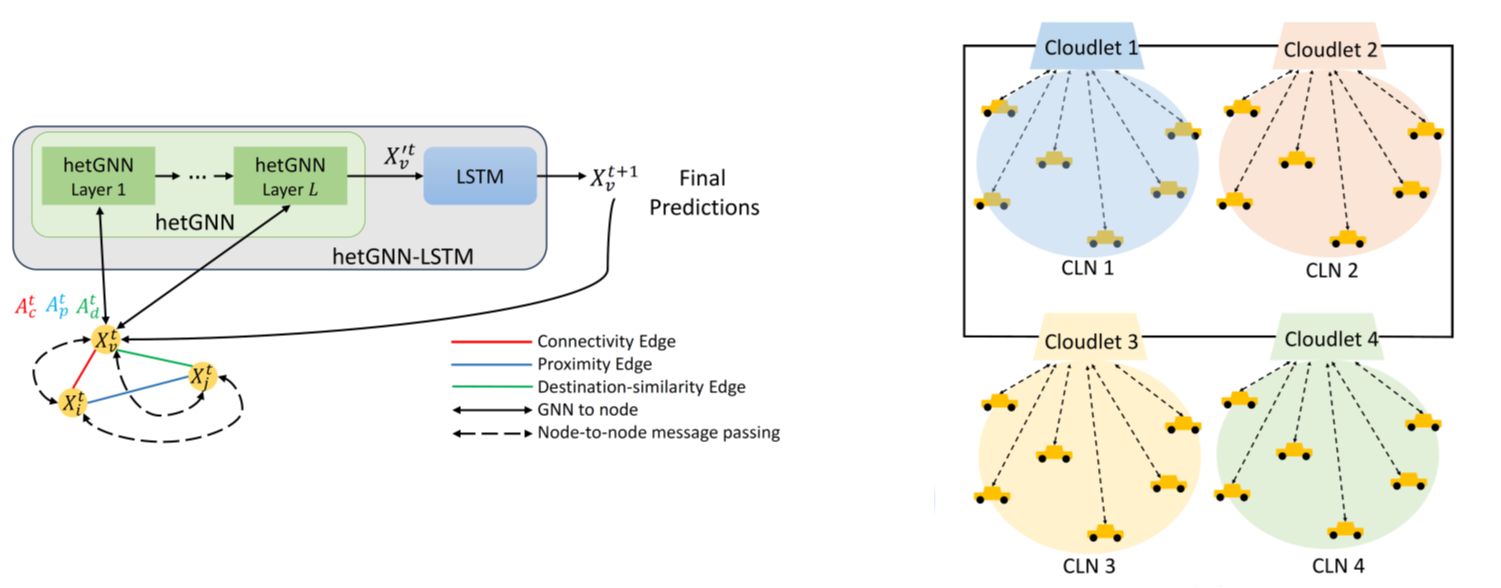

- An iterative loop combining a graph generative adversarial network (gGAN) with an LLM to reduce vulnerabilities while maintaining functionality.

- Contrastive learning to cast vulnerability reduction and functionality preservation as a dual-objective optimization.

- Optimized prompts that transfer across LLMs and vulnerability types.
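To make the dual-objective idea concrete, here is a minimal sketch, not PromSec's actual loss: an InfoNCE-style contrastive term pulls the embedding of repaired code (`anchor`) toward the embedding of the original functional code (`positive`) and away from functionally different code (`negatives`), and it is added to a security penalty. The plain-vector embeddings, the use of a vulnerability count as the security term, and the names `cosine`, `info_nce`, `dual_objective`, `lam`, and `temperature` are all illustrative assumptions.

```python
import math
from typing import Sequence

# Hypothetical sketch: embeddings are plain float vectors. In a real system they
# would come from an encoder over code graphs (an assumption, not PromSec's design).


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two equal-length, nonzero vectors."""
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0 or nv == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def info_nce(anchor: Sequence[float], positive: Sequence[float],
             negatives: Sequence[Sequence[float]], temperature: float = 0.1) -> float:
    """InfoNCE loss: small when the anchor is closer to the positive than to the negatives."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)  # subtract the max for a numerically stable log-sum-exp
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[0]  # equals -log softmax(logits)[0]


def dual_objective(vuln_count: float, anchor: Sequence[float], positive: Sequence[float],
                   negatives: Sequence[Sequence[float]], lam: float = 1.0,
                   temperature: float = 0.1) -> float:
    """Hypothetical security term (vulnerability count) plus a weighted functionality term."""
    return vuln_count + lam * info_nce(anchor, positive, negatives, temperature)
```

For example, `info_nce([1, 0], [1, 0], [[0, 1]])` is close to 0 because the anchor matches the positive, while swapping the anchor to `[0, 1]` gives roughly 10. The weight `lam` trades functionality preservation against vulnerability reduction.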

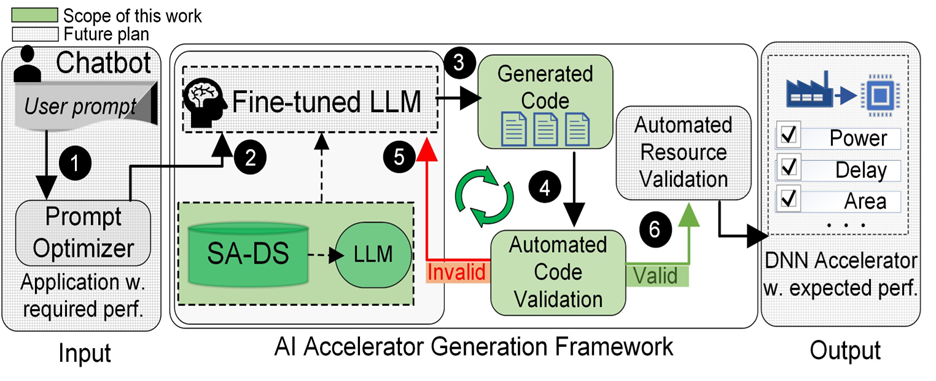

Methodology: The gGAN fixes vulnerabilities in the generated code, the LLM improves the prompt, and the two alternate in an iterative loop that optimizes code security.
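The loop can be sketched as a framework-free outline under stated assumptions; it is not the PromSec implementation. Here `generate_code` stands in for the LLM producing code from a prompt, `scan` for a vulnerability detector, `fix_code` for the gGAN repair step, and `refine_prompt` for the LLM updating the prompt. All four callables, the toy stubs, and `max_iters` are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LoopResult:
    prompt: str
    code: str
    vulnerabilities: List[str]
    iterations: int


def optimize_prompt(
    prompt: str,
    generate_code: Callable[[str], str],        # LLM role: prompt -> code
    scan: Callable[[str], List[str]],           # detector: code -> vulnerability IDs
    fix_code: Callable[[str, List[str]], str],  # gGAN role: repair vulnerable code
    refine_prompt: Callable[[str, str], str],   # LLM role: (prompt, fixed code) -> new prompt
    max_iters: int = 10,
) -> LoopResult:
    """Alternate repair and prompt refinement until no vulnerabilities remain."""
    code = generate_code(prompt)
    vulns = scan(code)
    iterations = 0
    while vulns and iterations < max_iters:
        fixed = fix_code(code, vulns)
        prompt = refine_prompt(prompt, fixed)
        code = generate_code(prompt)  # regenerate so the prompt itself carries the fix
        vulns = scan(code)
        iterations += 1
    return LoopResult(prompt, code, vulns, iterations)


# Toy stubs for illustration only (hypothetical, not real LLM or gGAN calls).
def toy_generate(prompt: str) -> str:
    return "value = int(x)" if "avoid eval" in prompt else "value = eval(x)"


def toy_scan(code: str) -> List[str]:
    return ["CWE-95"] if "eval(" in code else []  # CWE-95: eval injection


def toy_fix(code: str, vulns: List[str]) -> str:
    return code.replace("eval(", "int(")


def toy_refine(prompt: str, fixed: str) -> str:
    return prompt + " and avoid eval" if "int(" in fixed else prompt


result = optimize_prompt("parse the number x", toy_generate, toy_scan, toy_fix, toy_refine)
print(result)
```

With these stubs the loop converges after one iteration. The sketch regenerates code from the refined prompt instead of returning the patched code, since the output of interest is the optimized prompt; `max_iters` bounds the cost if the loop never reaches a clean scan.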

Key Results: PromSec reduces vulnerabilities in generated code while maintaining its functionality, significantly reduces operational time and cost, and produces prompts that transfer across programming languages and LLMs.

Publications:

- [1] M. Nazzal, I. Khalil, A. Khreishah, and N.H. Phan, “PromSec: Prompt Optimization for Secure Generation of Functional Source Code with Large Language Models (LLMs),” in 31st ACM Conference on Computer and Communications Security (CCS 2024), Salt Lake City, UT, USA, Oct. 2024. arXiv Preprint | Artifacts

- [2] T.K. Ton, N. Nguyen, M. Nazzal, A. Khreishah, C. Borcea, N.H. Phan, R. Jin, I. Khalil, and Y. Shen, “Demo: SGCode: A Flexible Prompt-Optimizing System for Secure Generation of Code,” in 31st ACM Conference on Computer and Communications Security (CCS 2024), Salt Lake City, UT, USA, Oct. 2024. Preprint | Demo Website

- [3] M. Nazzal, I. Khalil, A. Khreishah, and N.H. Phan, “Method and System for Prompt Optimization for Secure Generation of Functional Source Code with Large Language Models,” US Patent Application No. US63/561,573, Washington, D.C., USA, filed Mar. 5, 2024.